How to Measure Development Productivity

Every experienced engineering leader who has been asked, “How productive is our development team?” knows the dilemma captured in William Bruce Cameron’s observation: “Not everything that can be counted counts, and not everything that counts can be counted.”

Imagine a CTO announcing that their team wrote 50,000 lines of code last month. At first glance, it looks impressive. But what if those 50,000 lines lead to a month or more of rework, introduce more defects than working features, and leave customers frustrated? The code base grew, but the value did not.

This example shows why measuring productivity is both important and nuanced. Understanding outcomes, flow, and developer experience matters far more than tallying output. Measures of development productivity have long been controversial: software is knowledge work, and measuring it is not as simple as counting units coming off a manufacturing line. For years, organizations relied on simple metrics such as hours logged or lines of code. Unfortunately, such metrics tend to stifle collaboration and create perverse incentives.

Modern approaches recommend a balanced, system-level view that weighs speed alongside collaboration, quality, and developer well-being. Frameworks such as DORA, SPACE, and flow-based metrics are now widely used to measure productivity in meaningful ways.


Why Measuring Development Productivity Matters

- Business Alignment: Every hour of development time is a significant investment. Without measurement, organizations risk building things that customers or the business do not actually need. By providing visibility, metrics help ensure that resources support business goals such as higher revenue, reduced risk, or customer satisfaction.

- Continuous Improvement: What you don’t measure, you can’t improve. Productivity metrics provide a starting point for improvement and highlight bottlenecks such as slow code reviews, unreliable tests, and manual release processes. For example, if a team’s average lead time from commit to production is ten days, it can try out workflow changes or automation and track whether that number falls over time.

- Risk Management: Productivity measurement also reduces risk. Metrics such as mean time to recovery (MTTR) and change failure rate expose weaknesses in the delivery pipeline. Detecting these weaknesses early helps avoid costly outages and reputational damage.

- Developer Experience: Productivity is more than speed and output. As high-performing organizations recognize, it is directly affected by developer experience: workflows, tools, and satisfaction. A team held back by manual processes or ambiguous requirements is less creative and more likely to burn out.

In short, measuring development productivity is critical because it aligns engineering with business goals, encourages continuous improvement, controls risk, and improves working conditions.

Common Mistakes in Measuring Developer Productivity

Over-reliance on Vanity Metrics

Commit counts, lines of code, and closed tickets are often mistaken for indicators of productivity. In practice, these metrics encourage the wrong behavior: developers may rush low-value fixes to inflate their numbers or write verbose code instead of concise, effective solutions.

Measuring Individuals Instead of Groups

Software development is a team effort, and rewarding or punishing people based on individual metrics weakens that teamwork. For example, a developer might avoid pair programming and code reviews because they “don’t count” toward individual output. Instead, look at how productivity shows up at the team or system level.

Ignoring Quality and Results

Some organizations measure only speed. Fast delivery, however, is worthless if features don’t work as expected or don’t address customer problems. Ignoring quality leads directly to technical debt, which eventually slows teams down.

Failing to Contextualize Metrics

Metrics are not absolute. In a highly regulated industry, a team that deploys once a week may be more productive than a startup that deploys multiple times a day. Metrics are misleading in the absence of context.

Using Metrics as a Stick, not a Compass

When metrics are tied directly to performance reviews, teams may game them or resist measurement altogether. Metrics should be used to steer progress, not to penalize individuals.

Frameworks for Measuring Development Productivity

DORA Metrics

DORA (DevOps Research and Assessment) identifies four key metrics of software delivery performance:

- Deployment frequency reflects a team’s agility and confidence in shipping changes.

- Lead time for changes shows how quickly code moves from commit to production. Long lead times often point to testing or review bottlenecks.

- Change failure rate measures stability: the share of deployments that cause a failure in production. Teams with a high change failure rate spend more time fixing problems than building new value.

- Mean time to recovery (MTTR) measures how quickly a team can restore service after a failure.

According to DORA’s research, these metrics correlate with both organizational performance and business success, which makes them a good place to start.
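To make these four definitions concrete, here is a minimal Python sketch that computes them from a list of deployment records. The `Deployment` record shape and the `dora_metrics` function are hypothetical, not part of any DORA tool. The sketch assumes you can export commit and deploy timestamps from your pipeline, and that a failure begins at deploy time, so recovery is measured from deployment to restoration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    """One production deployment (hypothetical record shape, e.g. exported from CI/CD)."""
    commit_time: datetime                     # when the change was committed
    deploy_time: datetime                     # when it reached production
    failed: bool = False                      # did it cause a failure in production?
    restored_time: Optional[datetime] = None  # when service was restored, if it failed


def dora_metrics(deployments: list[Deployment], period_days: int) -> dict[str, float]:
    """Compute the four DORA metrics for deployments within one reporting period."""
    if not deployments or period_days <= 0:
        raise ValueError("need at least one deployment and a positive period")
    lead_times_h = [
        (d.deploy_time - d.commit_time).total_seconds() / 3600 for d in deployments
    ]
    failures = [d for d in deployments if d.failed]
    # Assumption: the failure starts at deploy time, so recovery = restore - deploy.
    recovery_h = [
        (d.restored_time - d.deploy_time).total_seconds() / 3600
        for d in failures
        if d.restored_time is not None
    ]
    return {
        "deploys_per_week": len(deployments) * 7 / period_days,
        "median_lead_time_hours": median(lead_times_h),
        "change_failure_rate": len(failures) / len(deployments),
        # 0.0 here means "no recorded recoveries", not "instant recovery".
        "mttr_hours": sum(recovery_h) / len(recovery_h) if recovery_h else 0.0,
    }


# Example: four deployments over a two-week period, one of which failed.
t0 = datetime(2024, 1, 1, 9, 0)
deploys = [
    Deployment(t0, t0 + timedelta(hours=4)),
    Deployment(t0 + timedelta(days=1), t0 + timedelta(days=1, hours=10),
               failed=True, restored_time=t0 + timedelta(days=1, hours=12)),
    Deployment(t0 + timedelta(days=3), t0 + timedelta(days=3, hours=6)),
    Deployment(t0 + timedelta(days=5), t0 + timedelta(days=5, hours=8)),
]
metrics = dora_metrics(deploys, period_days=14)
print(metrics)
```

The median is used for lead time so that a single long-running change does not dominate the figure. Recovery time uses the mean, as the metric's name suggests.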

The SPACE Framework in Depth

SPACE looks at productivity across five dimensions: Satisfaction and well-being, Performance, Activity, Communication and collaboration, and Efficiency and flow.

- Satisfaction can be measured through regular surveys and feedback.

- Performance can include business KPIs such as feature adoption.

- Activity tracks visible work, such as commits or pull requests, but it should never be used in isolation.

- Communication and collaboration can be assessed through participation in code reviews and the resolution of cross-team dependencies.

- Efficiency and flow emphasize uninterrupted focus, less context switching, and less waiting.

Flow and Value Stream Metrics in Depth

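Flow metrics show how work moves through the system and where it stalls:

- High work in progress (WIP) indicates excessive multitasking.

- Wait times are often longest in QA and approval phases.

- Low flow efficiency (active work time divided by total elapsed time) shows that most of the time is spent waiting rather than working.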

Mapping the value stream (idea -> code -> test -> deploy -> customer feedback) gives a systems view of productivity.
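Flow efficiency is simple arithmetic once each stage of a work item is marked as active or waiting. The following minimal sketch assumes that such per-stage timings are available. The `StageInterval` shape, the stage names, and the hours are all hypothetical. The sketch computes active time divided by total elapsed time and reports which waiting stage consumed the most time.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class StageInterval:
    """Time one work item spent in one value-stream stage (hypothetical shape)."""
    stage: str     # e.g. "code", "QA queue"
    hours: float   # elapsed hours in this stage
    active: bool   # True if someone was working on it, False if it was waiting


def flow_efficiency(intervals: list[StageInterval]) -> float:
    """Active time divided by total elapsed time, as a fraction from 0.0 to 1.0."""
    total = sum(i.hours for i in intervals)
    if total == 0:
        raise ValueError("no elapsed time recorded")
    return sum(i.hours for i in intervals if i.active) / total


def longest_wait(intervals: list[StageInterval]) -> Optional[str]:
    """Name of the waiting stage that consumed the most time, if there is one."""
    waits = [i for i in intervals if not i.active]
    return max(waits, key=lambda i: i.hours).stage if waits else None


# Example: one feature moving from idea to deployment.
item = [
    StageInterval("code", 6, True),
    StageInterval("review queue", 20, False),
    StageInterval("review", 2, True),
    StageInterval("QA queue", 30, False),
    StageInterval("test", 4, True),
    StageInterval("approval queue", 18, False),
]
print(f"flow efficiency: {flow_efficiency(item):.0%}")  # 12 active hours out of 80
print(f"longest wait: {longest_wait(item)}")
```

In this made-up example, most of the elapsed time is spent in queues rather than in active work, which is the pattern the bullets above describe.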

Business Metrics in Depth

Business metrics tie engineering work to outcomes, for example:

- The rate at which users adopt new features.

- How features affect revenue or cost savings.

- Reductions in customer churn linked to product improvements.
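Two of these business metrics reduce to simple ratios. In the following minimal sketch, the user IDs, the "export" feature, and the customer counts are all hypothetical. Adoption is defined here as the share of active users who used a feature at least once in the period. This is one common definition among several.

```python
def adoption_rate(feature_users: set[str], active_users: set[str]) -> float:
    """Share of active users who used the feature at least once in the period."""
    if not active_users:
        raise ValueError("no active users in the period")
    # Only count feature users who were also active in this period.
    return len(feature_users & active_users) / len(active_users)


def churn_rate(customers_at_start: int, customers_lost: int) -> float:
    """Fraction of customers at the start of the period who left during it."""
    if customers_at_start <= 0:
        raise ValueError("customers_at_start must be positive")
    return customers_lost / customers_at_start


active = {"u1", "u2", "u3", "u4", "u5"}
used_export = {"u1", "u3", "u9"}  # u9 was not active this period, so it is ignored
print(f"adoption: {adoption_rate(used_export, active):.0%}")  # 2 of 5 active users

before = churn_rate(customers_at_start=1000, customers_lost=40)
after = churn_rate(customers_at_start=1000, customers_lost=30)
print(f"churn before: {before:.1%}, after: {after:.1%}")
```

A drop in churn after a release is only a correlation. Linking it to a specific enhancement requires further analysis, such as comparing customers who used the feature with those who did not.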

Balancing Speed, Quality, and Developer Experience

No single metric can fully capture productivity. Focusing only on speed can erode quality, an excessive focus on quality can delay delivery, and ignoring developer experience can drive attrition.

A balanced approach combines leading indicators (such as PR size, work in progress, and review time) with lagging indicators (such as DORA metrics and defect rates). Together, they give a more accurate and meaningful picture.

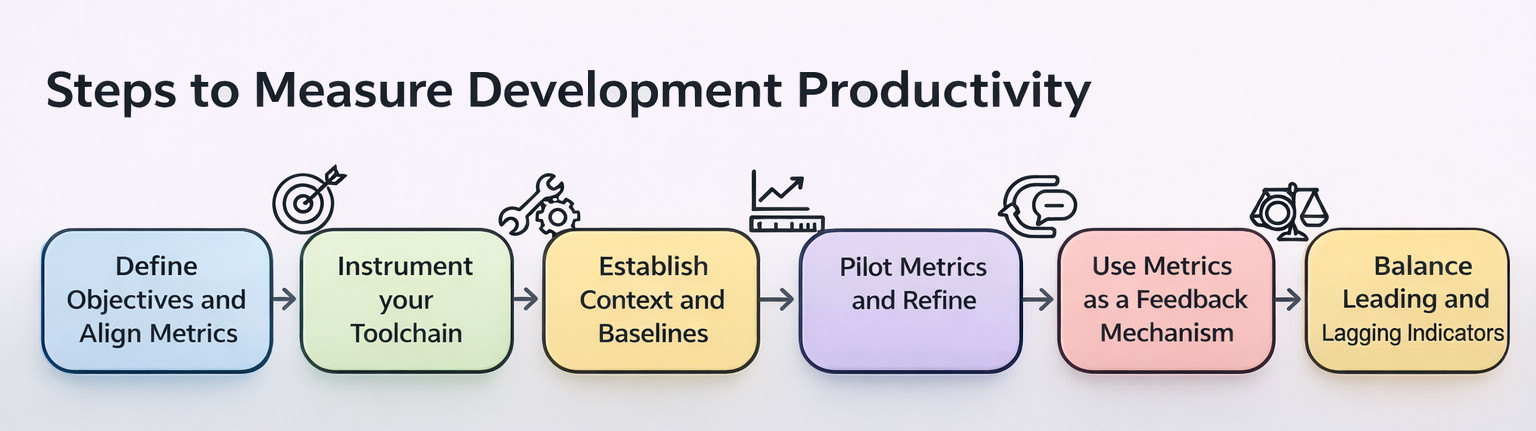

Practical Steps to Measure Development Productivity

- Define Objectives and Align Metrics

Start by defining the purpose of measuring productivity. Is it to improve developer experience, quality, or release speed? Different goals call for different metrics. For example, reducing MTTR may require better incident response procedures, whereas reducing lead time may depend mainly on automating tests and reviews.

- Instrument Your Toolchain

Development systems such as ticketing, CI/CD, monitoring, and source control (SCM) platforms already generate the data you need. Integrating these sources improves accuracy without adding manual overhead. For instance, CI/CD data can provide near-real-time lead time and deployment frequency.

- Establish Context and Baselines

Measure where you are today. For example, if your team currently deploys once a week, that is your baseline. Assess progress against your own baseline, not against other teams, and put results in context by considering project complexity, industry, and team size.
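Comparing a team against its own baseline can be as simple as a relative change between two medians. In the following minimal sketch, the weekly deployment counts are made up, and the function name is hypothetical. Medians are used so that a single unusually busy or quiet week does not swing the comparison.

```python
from statistics import median


def relative_change(baseline_weeks: list[float], recent_weeks: list[float]) -> float:
    """Relative change of the recent median versus the team's own baseline median."""
    base = median(baseline_weeks)
    if base == 0:
        raise ValueError("baseline median is zero; relative change is undefined")
    return (median(recent_weeks) - base) / base


# Deployments per week: six weeks before a process change, four weeks after it.
baseline_weeks = [1, 1, 2, 1, 1, 1]
recent_weeks = [2, 3, 2, 3]
change = relative_change(baseline_weeks, recent_weeks)
print(f"deployment frequency vs. baseline: {change:+.0%}")
```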

- Pilot Metrics and Refine

Start small: track a few key indicators for one or two teams, involve those teams in interpreting the results, and adjust the metrics if they have unintended effects such as stress or gaming.

- Use Metrics as a Feedback Mechanism

Metrics should guide retrospectives and continuous improvement initiatives. For example, if cycle time is high because code reviews take too long, the team might try pair programming or smaller PRs to speed things up.
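Before a retrospective, it can help to break cycle time down by stage to see where the time actually goes. The following minimal sketch assumes that per-PR stage durations have already been derived from SCM and CI/CD events. The stage names and hours are hypothetical. The sketch averages each stage across PRs and reports the stage that accounts for the largest share of cycle time.

```python
from collections import defaultdict

# Hypothetical per-PR stage durations in hours.
prs = [
    {"coding": 10, "waiting_for_review": 30, "review": 3, "deploy": 1},
    {"coding": 6, "waiting_for_review": 22, "review": 2, "deploy": 1},
    {"coding": 14, "waiting_for_review": 26, "review": 4, "deploy": 2},
]


def stage_averages(records: list[dict[str, float]]) -> dict[str, float]:
    """Average hours per stage across PRs; a stage missing from a PR counts as 0."""
    totals: dict[str, float] = defaultdict(float)
    for record in records:
        for stage, hours in record.items():
            totals[stage] += hours
    return {stage: total / len(records) for stage, total in totals.items()}


averages = stage_averages(prs)
cycle_time = sum(averages.values())
bottleneck = max(averages, key=averages.get)
for stage, hours in averages.items():
    print(f"{stage:>20}: {hours:5.1f} h ({hours / cycle_time:.0%})")
print(f"largest share of cycle time: {bottleneck}")
```

In this made-up data, most of the cycle time is spent waiting for review rather than reviewing. That points toward smaller PRs or pair programming rather than faster coding.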

- Balance Leading and Lagging Indicators

Leading indicators such as review time, work in progress, and PR size give early warning of trouble. Lagging indicators such as defect rates and MTTR reveal results after the fact. Together, they provide a more complete picture.
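Leading indicators can be computed from pull request data and checked against alert levels that the team agrees on. This minimal sketch is built on assumptions throughout: the `PullRequest` shape, the sample data, and the `THRESHOLDS` values are hypothetical illustrations, not industry standards. Lagging indicators such as MTTR would come from incident data instead.

```python
from dataclasses import dataclass
from statistics import median
from typing import Optional


@dataclass
class PullRequest:
    lines_changed: int
    hours_to_first_review: Optional[float]  # None if not reviewed yet
    merged: bool


# Hypothetical team-chosen alert levels, not industry standards.
THRESHOLDS = {"median_pr_size": 400, "median_hours_to_first_review": 12, "open_prs": 5}


def leading_indicators(prs: list[PullRequest]) -> dict[str, float]:
    """PR size, review latency and work in progress (open PRs) for a set of PRs."""
    reviewed = [p.hours_to_first_review for p in prs if p.hours_to_first_review is not None]
    return {
        "median_pr_size": median([p.lines_changed for p in prs]),
        # 0.0 if nothing has been reviewed yet, so no warning is raised for it.
        "median_hours_to_first_review": median(reviewed) if reviewed else 0.0,
        "open_prs": sum(1 for p in prs if not p.merged),
    }


def warnings(indicators: dict[str, float]) -> list[str]:
    """Names of indicators that exceed their alert threshold."""
    return [name for name, value in indicators.items() if value > THRESHOLDS[name]]


prs = [
    PullRequest(120, 4.0, True),
    PullRequest(650, 30.0, False),
    PullRequest(80, None, False),
    PullRequest(900, 26.0, True),
    PullRequest(300, 8.0, False),
]
current = leading_indicators(prs)
print(current)
print("early warnings:", warnings(current))
```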

The Role of Software Testing in Developer Productivity

- Bottlenecks in Traditional Testing: Manual regression cycles often delay releases by days or weeks, which lowers deployment frequency and increases lead time. Testing bottlenecks also frustrate developers, who may wait days for QA feedback.

- Using Quality to Boost Productivity: Inadequate testing increases change failure rate and MTTR. When production problems occur, developers spend more time firefighting and less time building new functionality. Good testing speeds up delivery and minimizes rework.

- Automation as a Necessity: For businesses striving to improve productivity, automated testing is now essential. Running integrated test suites continuously catches issues early and reduces the cost of fixing defects.

- AI-Driven Testing: Conventional test automation still requires ongoing maintenance. AI-powered solutions such as testRigor go a step further by reducing test flakiness and simplifying test authoring. This improves flow efficiency, shortens feedback loops, and helps ensure that productivity metrics (such as lead time and change failure rate) reflect reality rather than being distorted by unreliable tests.

- Linking Testing to Productivity Frameworks:

  - DORA: Testing directly affects lead time, deployment frequency, and change failure rate.

  - SPACE: Testing affects efficiency (fewer disruptions from bugs), collaboration (shared ownership of quality), and satisfaction (less frustration).

  - Flow: Automated testing improves flow metrics by reducing wait times.

Future Trends in Measuring Development Productivity

- AI Coding Assistants: Tools like GitHub Copilot accelerate coding and have the potential to redefine productivity, but they will also require new ways of measuring their value.

- Developer Experience Metrics: Organizations increasingly recognize developer experience (DevEx) as a measurable driver of productivity.

- Test Automation as a Standard Pillar: For businesses that are serious about productivity, AI-powered testing will become mandatory.

- System-Level, Not Individual-Level, Measurement: The industry is shifting from assessing the output of individual developers to assessing the performance of entire systems.

- Predictive Analytics and Leading Indicators: Organizations will use predictive analytics to detect early warning signs instead of waiting for lagging indicators.

- Alignment with Business Value Streams: In the future, engineering metrics will no longer be the only way to measure productivity. Instead, productivity will be closely tied to business results, such as how quickly ideas become valuable to customers.

Conclusion

The key is to use metrics for improvement rather than punishment, to link measurement to business outcomes, and to balance speed with quality. Even with modern approaches, development productivity remains partly subjective and hard to assess. Still, with a balanced, developer-friendly set of measurements, organizations can better understand their teams and achieve long-term success.
